Risk managers have been told they will need to understand the changing liability landscape as they look to implement Artificial Intelligence (AI) in their operations.

At its annual conference in Edinburgh, risk management association Airmic has launched a new guide to the use and risks of AI, produced in partnership with broker McGill and Partners.

The report highlights the need for awareness as the liability environment changes.

The report states: “When things go wrong with AI there is the potential for a range of losses, including financial and reputational along with third party liability. For example, if an algorithm causes financial or other loss, where does the liability fall? Where does the buck stop – would it be with the business that is using it, the AI developer or the licensor?”

It continued: “The lines between product and professional liability may shift in future as regulations come online.

“Emerging regulations that focus on what companies and boards must and must not do could also impact on directors’ and officers’ liability.

“Regulation will play a significant role for liability considerations of organisations – both developers and end users – because ultimately, it is the regulatory landscape that will inform organisations in terms of what they need to do.”

Airmic said: “The technology can be used with some good objectives – and misused with some bad ones.

“For these ‘dual use’ technologies it is important that organisations and those who govern them develop an understanding of what is happening and how they want the technology to be used.”

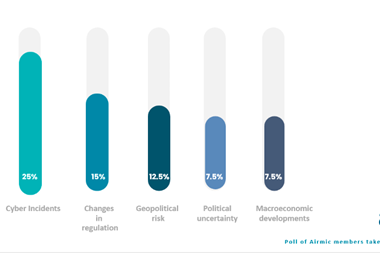

In March, Airmic said a poll of its membership found that 78% of members now have AI on their board’s agenda.

“AI has been flagged as an emerging risk for some time by member organisations,” it said. “Boards are discussing AI risk frameworks and risk assessments.

“Where initiatives around generative AI originate at the top, organisations are seeing clear benefits from that strategic leadership. However, boards need to be confident and informed about the outcomes of AI decisions that can affect the lives and wellbeing of people in wide-ranging ways.”

Airmic added that AI also has the ability to deliver a range of significant positives.

“AI has the potential to revolutionise cyber security,” it said. “AI-powered solutions can enhance cyber resilience by providing advanced capabilities to detect, prevent, and mitigate cyber threats.”

It continued: “By utilising machine learning techniques, AI can analyse vast amounts of data to identify patterns and vulnerabilities that traditional cyber security methods may miss. Managing risks should be based on the best available information – decisions should be informed and taken using reliable sources of data.

“Data should be accurate, timely and verifiable, with quality assurance in place.

“However, organisations should be alert to biases which may distort information and lead to the wrong decisions being made.”
