Tackling illegal content is a multifaceted aspect of risk management. Ana Jankov, Letícia Tavares and Jamie Bomford of FTI Consulting explore the steps that risk professionals must take to ensure compliance with the EU’s Digital Services Act.

The European Union’s Digital Services Act (DSA) will begin to take effect in August 2023 for the largest online platforms and search engines, and will apply to other digital service providers from February 2024.

This landmark law will introduce new rules around transparency and risk assessments, focusing on the removal of illegal content and the effective protection of users’ rights online.

A wide variety of digital service providers offering services in the EU, whether they are established in the EU or in other jurisdictions, will be required to follow the new content moderation rules, and risk managers will need to understand the necessary changes and be ready to support them.

What is the Digital Services Act and how does it affect risk managers?

The DSA’s measures can be seen as a step towards harmonising efforts to tackle illegal activity online, giving users assurance and rights as the internet evolves.

Under the DSA, organisations will need to raise their scrutiny levels when operating in today’s complex digital ecosystem.

Tackling illegal content is not always straightforward and is often a multifaceted aspect of risk management.

It spans everything from operationalising the ability to receive alerts about illegal content and identifying content that is actually illegal, to understanding who is behind it (for instance, in the case of fake ad agencies), taking that content down and cooperating with the authorities.

This is where the DSA rules will provide clarifying guidance.

Which companies are impacted by the act?

Although the DSA’s original policy rationale was to regulate Big Tech companies, given their ownership and dissemination of vast amounts of data and their significant network effects, the legislation evolved to encompass a wider range of digital services.

In the final iteration of the law, size is not necessarily a decisive factor.

Therefore, to avoid inadvertently violating the law as it takes effect, all providers of digital services should carefully assess whether the DSA applies to them.

The DSA entered into force in November 2022, with highly publicised emphasis on the largest online platforms and search engines. For those large players (specifically, those with 45 million or more average monthly active users in the EU), the DSA will become applicable in August 2023.

As that date nears, attention is shifting to the other entities in scope across the digital services economy, for which the DSA will become applicable on 17 February 2024.

How risk managers should prepare for the Digital Services Act

Whether an online platform has only just published its user numbers or has yet to assess its next steps in implementing the DSA, the requirements may be challenging, as they may entail embedding measures across the organisation and the business, as well as enhancing technical capabilities.

In view of that, there are four main steps that risk management teams within digital service providers should take to begin preparing for possible regulation under the DSA:

1. Know whether the DSA applies to your company

This regulation applies to providers of a variety of digital services which fall under the definition of “intermediary services,” including:

- Mere conduit services. Enable transmission of information over a communication network or provide access to a communication network. Examples include internet exchange points, wireless access points, virtual private networks, DNS services and resolvers, top-level domain name registries, certificate authorities that issue digital certificates, Voice over Internet Protocol (VoIP) and other interpersonal communication services.

- Caching services. Enable transmission of information over a communication network, involving automatic, intermediate and temporary storage of that information for the sole purpose of making the information’s onward transmission to other recipients more efficient. Examples include content delivery networks, reverse proxies or content adaptation proxies.

- Hosting services. They consist of the storage of information provided by, and at the request of, a recipient of the service. Examples include cloud computing, web hosting, paid referencing services or services enabling sharing of information and content online.

Online platforms form a sub-type of hosting services that, in addition to storing, also disseminate information to the public. Examples include social networks, content-sharing platforms, app stores, online marketplaces and online travel and accommodation platforms.

Note that mere technical accessibility of an intermediary service from the EU does not, in itself, trigger the application of the DSA.

The DSA applies only to providers of intermediary services that enable the use of their services in the EU and have a “substantial connection” to the EU. Such a connection exists if the provider:

- has an establishment in the EU; or

- targets its activities towards one or more EU member states (e.g., by making an application available in the relevant national application store, by providing local advertising or customer service in a language used in an EU member state); or

- has a considerable number of recipients in one or more EU member states relative to the population of that state or states.
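The three alternative criteria above lend themselves to a simple screening function. The Python sketch below is illustrative only and assumes a hypothetical `Provider` record. In particular, `share_threshold` is an invented parameter, because the DSA does not set a fixed figure for a “considerable number” of recipients, and the population figure for member state “AA” is a placeholder rather than real data. A result like this is a prompt for legal review, not a conclusion.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Provider:
    """Hypothetical description of a provider's links to the EU."""
    enables_use_in_eu: bool
    has_eu_establishment: bool = False
    targets_eu_member_states: bool = False
    # Member state code -> number of recipients of the service in that state
    recipients_by_state: dict[str, int] = field(default_factory=dict)


def has_substantial_connection(
    provider: Provider,
    populations: dict[str, int],
    share_threshold: float,
) -> bool:
    """Check the three alternative criteria (any one is enough).

    `share_threshold` is a hypothetical cut-off: the DSA does not define a
    numeric value for a "considerable number" of recipients.
    """
    if provider.has_eu_establishment or provider.targets_eu_member_states:
        return True
    for state, recipients in provider.recipients_by_state.items():
        population = populations.get(state, 0)
        if population > 0 and recipients / population >= share_threshold:
            return True
    return False


def dsa_may_apply(provider: Provider, populations: dict[str, int], share_threshold: float) -> bool:
    # Mere technical accessibility from the EU is not enough on its own:
    # the provider must also have a substantial connection to the EU.
    return provider.enables_use_in_eu and has_substantial_connection(
        provider, populations, share_threshold
    )


# Placeholder figures for a hypothetical member state "AA"; not real data.
populations = {"AA": 1_000_000}
accessible_only = Provider(enables_use_in_eu=True, recipients_by_state={"AA": 500})
targeting = Provider(enables_use_in_eu=True, targets_eu_member_states=True)
print(dsa_may_apply(accessible_only, populations, share_threshold=0.05))  # False
print(dsa_may_apply(targeting, populations, share_threshold=0.05))        # True
```

The criteria are checked with a logical “or”, mirroring the list above: an EU establishment or EU targeting is sufficient on its own, and only otherwise does the recipient share matter.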

2. Know which DSA provisions apply

Different DSA obligations attach to different types of intermediary services. Knowing the exact type of intermediary service plays a vital role in determining the applicable DSA provisions.

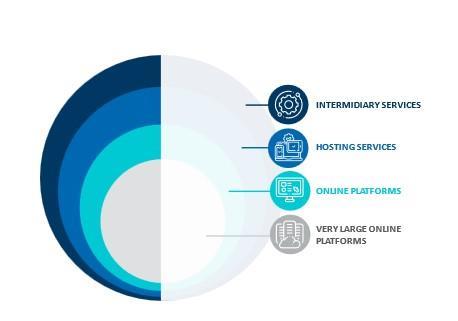

The DSA establishes a tiered approach to the scope of obligations.

All intermediary service providers are bound by the basic set of obligations that includes:

- Establishing a single point of contact for communication with the supervisory authorities, as well as a single point of contact for communication with the recipients of the services;

- Establishing a legal representative in the EU (for providers of intermediary services which do not have an establishment in the EU);

- Describing in the provider’s terms and conditions any content-related restrictions, including information on content moderation policies, procedures, measures and tools; and

- Publishing an annual report on content moderation, including information such as the number of orders received from the authorities and describing any content moderation carried out at the provider’s own initiative.

The DSA then gradually adds cumulative obligations for each tier of intermediary services, in the following order: (i) hosting services (including online platforms regardless of size); (ii) online platforms (excluding micro and small enterprises); (iii) online platforms allowing consumers to conclude distance contracts with traders (excluding micro and small enterprises); and (iv) very large online platforms and very large online search engines.
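Because each tier adds to the obligations of the tiers below it, the structure can be modelled as a cumulative lookup. The sketch below is a simplified illustration under several assumptions: the obligation labels are paraphrased from this article rather than taken from an official list, the marketplace tier is left as an empty placeholder, and search engines are folded into the top tier instead of being modelled separately. Placing the notice mechanism at the hosting tier and more detailed transparency reporting at the online platform tier reflects our reading of the DSA rather than the text above, and the carve-out of very large platforms from the micro and small enterprise exclusion is likewise an assumption flagged in the code.

```python
from __future__ import annotations

from enum import IntEnum


class Tier(IntEnum):
    """Cumulative DSA tiers as described in the article (simplified)."""
    INTERMEDIARY = 0
    HOSTING = 1
    ONLINE_PLATFORM = 2
    MARKETPLACE = 3  # platforms allowing consumers to conclude distance contracts with traders
    VERY_LARGE = 4   # very large online platforms / search engines


# Illustrative, non-exhaustive labels; not an official list of DSA articles.
OBLIGATIONS: dict[Tier, list[str]] = {
    Tier.INTERMEDIARY: [
        "single points of contact",
        "EU legal representative (if no EU establishment)",
        "content restrictions in terms and conditions",
        "annual content moderation report",
    ],
    Tier.HOSTING: ["notice mechanism for potentially illegal content"],
    Tier.ONLINE_PLATFORM: ["more detailed transparency reporting"],
    Tier.MARKETPLACE: [],  # placeholder: marketplace-specific duties not listed here
    Tier.VERY_LARGE: ["systemic risk assessments", "independent annual audits"],
}

# Tiers from which micro and small enterprises are excluded.
SME_EXEMPT = {Tier.ONLINE_PLATFORM, Tier.MARKETPLACE}


def applicable_obligations(tier: Tier, micro_or_small: bool = False) -> list[str]:
    """Collect obligations from every tier up to and including `tier`."""
    result: list[str] = []
    for level in Tier:  # iterates in ascending order of tier
        if level > tier:
            break
        # Assumption: the SME exclusion does not extend to very large platforms.
        if micro_or_small and level in SME_EXEMPT and tier != Tier.VERY_LARGE:
            continue
        result.extend(OBLIGATIONS[level])
    return result


print(applicable_obligations(Tier.HOSTING))
print(applicable_obligations(Tier.ONLINE_PLATFORM, micro_or_small=True))
```

Using an ordered `IntEnum` keeps the “each tier includes everything below it” rule in one loop, so adding a tier or an obligation only means editing the table.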

Those additional obligations include more detailed transparency reporting and a notice mechanism that allows individuals and entities to flag potentially illegal content.

Very large online platforms and very large online search engines must also carry out wide-ranging risk assessments to identify potential systemic risks, such as negative effects on the exercise of fundamental rights (including risks stemming from algorithmic systems), and undergo independent annual audits.

Establishing the type of intermediary service requires looking holistically at multiple, often interconnected, digital features.

For example, distinguishing between online platforms and other types of intermediary services is not always clear-cut.

According to the DSA, services should not be considered as online platforms where dissemination to the public is merely a minor and ancillary feature linked to another service.

Recital 13 of the DSA gives the example of the comments section in an online newspaper, which is unlikely to constitute an online platform because it is ancillary to the main service (the publication of news).

By contrast, the same recital states that the storage of comments in a social network should be considered an online platform service where it is clear that it is not a minor feature of the service offered, even if it is ancillary to publishing the posts of recipients of the service.
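The category definitions listed earlier and the Recital 13 distinction can be approximated as a first-pass decision function. This is a hypothetical sketch: the `ServiceFeatures` flags are invented for illustration, real services rarely reduce to yes/no answers, and treating a newspaper comments section as plain hosting is a simplifying assumption (the recital says only that it is unlikely to be an online platform).

```python
from dataclasses import dataclass
from enum import Enum


class ServiceType(Enum):
    MERE_CONDUIT = "mere conduit"
    CACHING = "caching"
    HOSTING = "hosting"
    ONLINE_PLATFORM = "online platform"


@dataclass
class ServiceFeatures:
    """Hypothetical yes/no features of one service; real cases need legal review."""
    stores_at_recipient_request: bool
    temporary_storage_for_onward_transmission: bool
    disseminates_to_public: bool
    dissemination_minor_and_ancillary: bool = False


def classify(features: ServiceFeatures) -> ServiceType:
    if features.stores_at_recipient_request:
        # Online platforms are hosting services that also disseminate to the
        # public, unless that dissemination is only a minor, ancillary feature.
        if features.disseminates_to_public and not features.dissemination_minor_and_ancillary:
            return ServiceType.ONLINE_PLATFORM
        return ServiceType.HOSTING
    if features.temporary_storage_for_onward_transmission:
        return ServiceType.CACHING
    return ServiceType.MERE_CONDUIT


newspaper_comments = ServiceFeatures(True, False, True, dissemination_minor_and_ancillary=True)
social_network_comments = ServiceFeatures(True, False, True)
print(classify(newspaper_comments).value)       # hosting
print(classify(social_network_comments).value)  # online platform
```

Since one organisation may run several services, a function like this would be applied per service, not per company.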

Another challenge organisations face is that different sets of rules can apply to a single organisation providing multiple types of intermediary services.

An organisation may provide several types of digital services, either simultaneously or successively, as a result of gradually expanding the scope of services.

As different DSA obligations attach to different types of intermediary services, risk management teams will need to coordinate with stakeholders across the organisation to ensure that each intermediary service is covered by an appropriate compliance framework.

This will require an assessment of all segments of the business potentially qualifying as intermediary services, development of compliance functions, and a watchful eye on risks and requirements relating to the future development of services that come under the DSA.

3. Deploy a tailored compliance framework

To comply, organisations are working to deepen their understanding of the processes, systems and governance they have in place.

The starting point is assessing how the DSA applies to the business and how far the organisation has progressed in its implementation journey.

To that end, risk managers and their organisational partners in legal, privacy and compliance functions should perform a readiness assessment to identify the intermediary services in scope and gaps against the applicable DSA provisions.

Mapping out existing and foreseeable risks, including any risks stemming from relevant algorithmic systems already in operation or development, can help drive content moderation efforts in the right direction.

Accordingly, organisations should develop compliance and risk management methodologies, taking into account any content moderation policies or methods that they might already apply.

Although the DSA imposes no general obligation to monitor the information that providers of intermediary services transmit or store, businesses should assess the possibility and benefits of voluntary, own-initiative content monitoring.

This practice can increase transparency, give users a safer online environment and reduce reputational risk, but it requires an organisation to be ready to act expeditiously to remove or disable access to any illegal content discovered in this way.

For active monitoring, organisations can leverage machine learning tools that can analyse content.

The algorithms within these tools can identify potential issues early on and automatically flag them for analysis.
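A minimal sketch of the flag-for-analysis step follows, assuming a scoring function that returns a value between 0 and 1 for each piece of content. The `keyword_score` stand-in is a deliberately crude keyword heuristic, not a machine learning model, and its term list and the 0.8 default threshold are hypothetical; in practice a trained classifier would be passed in as the `score` argument. Flagged items are queued for human review rather than removed automatically.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class ContentItem:
    item_id: str
    text: str


def flag_for_review(
    items: Iterable[ContentItem],
    score: Callable[[str], float],
    threshold: float = 0.8,
) -> List[Tuple[str, float]]:
    """Return (item_id, score) pairs at or above `threshold`, highest first.

    Flagged items go to human analysts; nothing is removed automatically.
    """
    scored = [(item.item_id, score(item.text)) for item in items]
    flagged = [pair for pair in scored if pair[1] >= threshold]
    flagged.sort(key=lambda pair: pair[1], reverse=True)  # riskiest first
    return flagged


def keyword_score(text: str) -> float:
    """Stand-in scorer (a keyword heuristic, not a machine learning model)."""
    suspicious_terms = {"counterfeit", "stolen"}  # hypothetical term list
    return 1.0 if suspicious_terms & set(text.lower().split()) else 0.0


items = [
    ContentItem("a", "Genuine trainers for sale"),
    ContentItem("b", "Counterfeit watches, cheap"),
]
print(flag_for_review(items, keyword_score))  # [('b', 1.0)]
```

Keeping the scorer as a parameter separates the model from the review workflow, so the threshold and queue ordering can be tested and audited independently of whichever tool produces the scores.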

Organisations should also consider leveraging digital risk assessment tools for DSA compliance purposes, accompanied by automation functions where possible, which can help optimise recurring processes such as transparency reporting.
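As an example of automating a recurring reporting step, the sketch below aggregates a year of moderation events into headline counts of the kind the annual content moderation report covers, such as orders received from authorities and own-initiative moderation. The `ModerationEvent` fields, the source and action labels and the output keys are all hypothetical; they are not the DSA’s reporting format, and the sample log is invented.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable


@dataclass
class ModerationEvent:
    day: date
    source: str  # hypothetical labels: "authority_order", "user_notice", "own_initiative"
    action: str  # hypothetical labels: "removed", "access_disabled", "no_action"


def annual_counts(events: Iterable[ModerationEvent], year: int) -> Dict[str, int]:
    """Aggregate one calendar year of events into headline figures for a report draft."""
    in_year = [e for e in events if e.day.year == year]
    by_source = Counter(e.source for e in in_year)  # missing keys count as 0
    return {
        "authority_orders_received": by_source["authority_order"],
        "user_notices_received": by_source["user_notice"],
        # Own-initiative moderation that actually led to an action
        "own_initiative_actions": sum(
            1 for e in in_year if e.source == "own_initiative" and e.action != "no_action"
        ),
    }


log = [
    ModerationEvent(date(2024, 3, 1), "authority_order", "removed"),
    ModerationEvent(date(2024, 5, 9), "own_initiative", "access_disabled"),
    ModerationEvent(date(2024, 6, 2), "own_initiative", "no_action"),
    ModerationEvent(date(2023, 12, 30), "user_notice", "removed"),
]
print(annual_counts(log, 2024))
# {'authority_orders_received': 1, 'user_notices_received': 0, 'own_initiative_actions': 1}
```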

Putting in place periodic follow-up assessments is crucial to ensure that organisations adapt to any changes that may occur over time.

This can be the case, for example, if an organisation introduces new intermediary services triggering new or expanded obligations, or if the number of active users of a platform increases, causing a potential shift into the “very large online platform” category with additional obligations.
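The user-growth trigger can be monitored with a simple check against the 45 million threshold. This sketch assumes monthly active user figures are already available and averages the six most recent months, which reflects our understanding of the averaging period in Article 24(2) of the DSA. The window is exposed as a parameter in case that assumption needs adjusting, and the sample figures are invented.

```python
from typing import Sequence

# DSA threshold: 45 million or more average monthly active recipients in the EU.
VLOP_THRESHOLD = 45_000_000


def average_monthly_active(monthly_counts: Sequence[int], window: int = 6) -> float:
    """Average of the most recent `window` monthly figures (window is an assumption)."""
    if len(monthly_counts) < window:
        raise ValueError(f"need at least {window} monthly figures")
    recent = monthly_counts[-window:]
    return sum(recent) / window


def may_reach_vlop_threshold(monthly_counts: Sequence[int], window: int = 6) -> bool:
    # Reaching the threshold only signals a potential shift into the VLOP
    # category; formal designation is a decision of the European Commission.
    return average_monthly_active(monthly_counts, window) >= VLOP_THRESHOLD


growing = [44_000_000] * 5 + [47_000_000]
steady_high = [46_000_000] * 6
print(may_reach_vlop_threshold(growing))      # False (average is 44.5 million)
print(may_reach_vlop_threshold(steady_high))  # True
```

Averaging smooths out a single spike month, so a check like this is better suited to triggering a periodic reassessment than to reacting to one-off traffic peaks.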

4. Carry out scenario testing

Finally, once a robust compliance and preparedness framework is in place, it is vital to test the effectiveness of processes and teams’ readiness to react to the range of operational and reputational scenarios that could arise as a result of the DSA.

Beyond fines for non-compliance, companies in scope should not underestimate reputational risk.

Although the reputational consequences of missteps under the DSA are not yet known, given the extensive public conversation around the DSA to date, enforcement actions and sanctions under the DSA are expected to attract widespread media coverage and public attention.

Risk managers can build institutional resilience against potential consequences by carrying out mock scenario exercises that test and train the organisation’s processes, plans and people.

These scenarios should be informed by reputational risk mapping, which identifies hypothetical scenarios for which there is significant value in testing responses: either higher-likelihood events (e.g., unaddressed allegations of illegal content from grassroots advocacy groups) or high-impact ones (e.g., an EU-mandated dawn raid).

As the DSA moves from political debate to practical implementation, scrutiny of platforms and expectations of enforcement by regulators will increase attention to, and discussion of, how companies are adapting to the requirements.

Preparing ahead of any potential real-life scenario will help embed resilience and organisational understanding of both reporting and compliance processes and requirements.
